AI in Indie Horror and Simulation Games: Ethics, Bias, and the Future of Modding

Alright, fellow PC gamers, settle in. We need to talk. We’re living in the age of AI, and it's not just about robots taking over the world (yet). It's creeping into our beloved indie games, particularly in genres like horror and simulation. As a veteran PC gamer who’s seen it all – from the pixelated horrors of Alone in the Dark to the sprawling universes of Civilization – I’m here to give you the straight dope on what this means for the games we love, and the ethical quagmire it's creating.

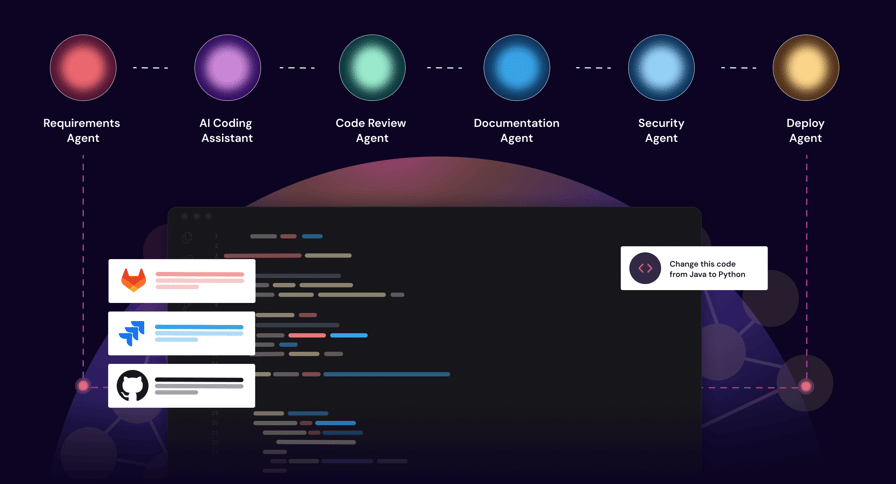

We’re talking about tools like Inworld AI, which promises dynamic character dialogue and emergent quests, and Layer AI, which lets devs crank out textures and materials faster than you can say "procedural generation." Sounds amazing, right? But before we get too hyped, let's dive deep into the potential pitfalls, especially concerning the training data used to power these AI overlords.

The Allure (and Danger) of AI in Indie Development

Indie developers are always looking for an edge. They often lack the massive budgets and manpower of AAA studios, so they’re turning to AI to level the playing field. Tools like Inworld AI and Layer AI offer tantalizing possibilities:

- Inworld AI: Imagine playing Dread Hunger and encountering NPCs who react dynamically to your actions, offering unique quests based on your reputation and the unfolding events of the game. No more generic fetch quests! The potential for emergent gameplay is huge.

- Layer AI: Modders working on RimWorld can now iterate on textures for weapons, clothing, and even alien species at lightning speed. Forget spending hours tweaking pixels – just feed the AI a prompt and boom, instant (potentially biased) content.

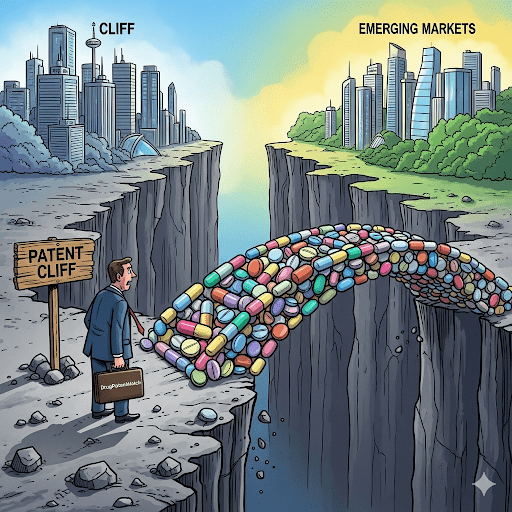

But here's the kicker: AI is only as good as the data it's trained on. And that's where things get ethically murky.

The Bias Behind the Bytes: Unpacking the Ethical Concerns

The biggest worry is bias. AI models are trained on massive datasets scraped from the internet, which often reflect existing societal biases related to gender, race, culture, and more. These biases can then seep into the AI-generated content, leading to problematic and offensive portrayals within our games.

Think about it:

- Character Dialogue: An AI trained on biased text data might generate dialogue for female characters in Dread Hunger that reinforces harmful stereotypes about women being weak or emotional.

- Texture Generation: Layer AI, fed a dataset with skewed representations of historical figures, might generate textures for Civilization VI that perpetuate inaccurate or offensive portrayals of certain cultures. Imagine a "warrior" texture for a Native American civilization based solely on Hollywood stereotypes. Yikes.

- Faction Creation: In Stellaris, imagine using AI to generate the traits and ideologies of a new alien civilization. Xenophobic or militaristic empires are nothing new in that game, but if the AI's training data is biased, it might pair those traits with names, looks, or customs that echo harmful stereotypes about real-world groups.
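To make the "only as good as the data" point concrete, here's a toy sketch in Python. It is not how Inworld AI, Layer AI, or any real model works; the training pairs and function names are made up for illustration, and the "model" is just a frequency count. The point is only that whatever skew goes in comes straight back out.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data": (character type, descriptor) pairs.
# The skew is deliberate, standing in for a biased scraped dataset.
TRAINING_PAIRS = [
    ("female", "emotional"), ("female", "emotional"),
    ("female", "weak"), ("female", "brave"),
    ("male", "brave"), ("male", "brave"),
    ("male", "strong"), ("male", "emotional"),
]


def train(pairs):
    """Count how often each descriptor appears with each character type."""
    counts = defaultdict(Counter)
    for character_type, descriptor in pairs:
        counts[character_type][descriptor] += 1
    return counts


def most_likely_descriptor(counts, character_type):
    """Return the descriptor the toy model favors for this character type."""
    return counts[character_type].most_common(1)[0][0]


def descriptor_share(counts, character_type, descriptor):
    """Fraction of this character type's examples that use the descriptor."""
    total = sum(counts[character_type].values())
    return counts[character_type][descriptor] / total


model = train(TRAINING_PAIRS)
print(most_likely_descriptor(model, "female"))         # emotional
print(most_likely_descriptor(model, "male"))           # brave
print(descriptor_share(model, "female", "emotional"))  # 0.5
print(descriptor_share(model, "male", "emotional"))    # 0.25
```

Nobody told the toy model that female characters are "emotional". It simply saw that pairing twice as often, and that is all it takes.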

Modding Mayhem: Bias Amplified

Now, consider the modding scene. Civilization VI, Stellaris, and RimWorld are all heavily modded, meaning players can create and share their own content, further amplifying the potential for bias. Imagine a modder using Layer AI to create the art for a new RimWorld faction, guided by prompts that reflect their own biased worldview. Suddenly, that bias is baked into the game, potentially affecting thousands of players.

I talked to a few modders about their experiences and concerns.

"It's tempting to use AI for texture creation," says 'GrimTech', a RimWorld modder. "It saves so much time. But I worry about where the AI gets its ideas. I don't want to accidentally create something that's offensive or perpetuates harmful stereotypes."

Another modder, 'CivFanatic', who works on Civilization VI mods, added, "Transparency is key. If a mod uses AI-generated content, the modder needs to be upfront about it and take responsibility for ensuring it's not biased."

Fighting the Good Fight: What Can PC Gamers Do?

So, what can we, the players, do to combat this? Here's some actionable advice:

- Be a Critical Consumer: Question everything. When you encounter AI-generated content in a game, ask yourself: does this feel right? Does it reinforce any stereotypes? Does it accurately represent the group or culture it's portraying?

- Reverse Image Search: Run suspicious textures through a reverse image search such as Google's. It can't tell you what a model was trained on, but it can surface visually similar images that hint at where a look came from. If a "medieval peasant" texture in a RimWorld mod closely matches a stock photo of a historically inaccurate Hollywood costume, flag it for the modder and the community.

- Text Analysis Tools: Run AI-generated dialogue through a text analysis tool that flags phrases and terms commonly associated with prejudice or discrimination. Treat the hits as a prompt for a human read-through, since word lists miss context.

- Support Transparent Modders: Seek out modders who are open about their use of AI and actively work to mitigate bias. Encourage them to document their process and the steps they take to ensure their content is ethical.

- Engage in Community Discussion: Talk about these issues! Raise awareness within your gaming communities. The more we discuss the ethical implications of AI, the more likely we are to create a more inclusive and responsible gaming environment.

- Demand Transparency from Developers: When purchasing a game or using an AI tool, look for documentation on the datasets that were used to train the AI. If this information isn't readily available, contact the developers and ask. Public pressure for transparency is key to holding developers accountable.
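If you'd rather roll your own than trust a random website, the text analysis idea above can be sketched in a few lines of Python. Everything here is an assumption for illustration: the tiny `FLAGGED_TERMS` watchlist, the `flag_dialogue` helper, and the sample lines are all made up, and a real checker would need a much larger, carefully curated lexicon plus a human to judge context.

```python
import re

# Hypothetical watchlist for illustration only; a real tool would use a
# far larger, curated lexicon.
FLAGGED_TERMS = {
    "hysterical": "gendered stereotype",
    "savage": "cultural stereotype",
    "primitive": "cultural stereotype",
    "exotic": "othering language",
}


def flag_dialogue(lines):
    """Return (line_number, term, reason) for each watchlist hit."""
    hits = []
    for number, line in enumerate(lines, start=1):
        # Lowercase and split into words so punctuation doesn't hide a match.
        for word in re.findall(r"[a-z']+", line.lower()):
            if word in FLAGGED_TERMS:
                hits.append((number, word, FLAGGED_TERMS[word]))
    return hits


sample = [
    "Stay back, you hysterical woman!",
    "The tribe to the north is primitive and savage.",
    "We should share our rations with the crew.",
]

for hit in flag_dialogue(sample):
    print(hit)
```

A keyword match is a reason to go read the line, not a verdict. "Savage" in a line about a blizzard is fine; aimed at a faction modeled on a real culture, it probably isn't.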

The Future is Now: Embracing AI Responsibly

AI is here to stay. The key is to embrace it responsibly. We need to be vigilant about the potential for bias and actively work to mitigate it. This means demanding transparency from developers, supporting ethical modders, and engaging in critical consumption. It's a tall order, but the future of our favorite games – and the inclusivity of our gaming communities – depends on it. Let’s ensure that AI enhances our experiences, rather than perpetuating harmful stereotypes and biases. Now, if you'll excuse me, I have a RimWorld colony to manage… and a few textures to reverse-image search.